Tumblr recently announced that it will no longer tolerate adult content on its platform. This is a problem for artists and art historians because social media and blogging platforms have proven lousy at distinguishing nudity in art from nudity in pornography. As a result, platforms like Facebook have famously flagged important cultural art and artifacts, like the Venus of Willendorf, as adult content and removed them from their sites (essentially erasing our history).

Problematic as it is, I can see how Facebook’s system could mistake the Venus, an object actually intended to represent the nude human form, for potential adult content. Tumblr’s artificial intelligence, however, is flagging all kinds of bizarre things as adult content, while failing to catch many images that actually do contain nudity. So if you were worried that machines and AI would eventually outsmart us, steal our jobs, and then steal our boyfriends/girlfriends, fear not. The machines are just not that into us.

Mustard Dream, Tom White, 2018

So what do AI-censoring algorithms find sexy? As it turns out, AI’s definition of nudity is pretty hilarious. For example, AI artist Tom White cooked up this sexy number he calls Mustard Dream, which Tumblr’s AI censor immediately flagged as “adult content.”

Tom White’s Mustard Dream, flagged by Tumblr as “adult content”

Apparently Tom’s milkshake brings all the droids to the yard, as Mustard Dream also earned a near-perfect score of 92.4% for “explicit nudity” on AWS (Amazon Web Services).

Mustard Dream scores 92.4% on the AWS (Amazon Web Services) Explicit Nudity detector

It is no coincidence that White’s art scores so high on the various AI “adult content” filters. For several years, White has been learning to see things the way machines see them. His work distills an image down to the minimal elements an AI needs to recognize an object. He has famously done this for the objects below, which were recognized as a fan, a cello, and a tick (left to right).

Fan, Tom White, 2018

Cello, Tom White, 2018

Tick, Tom White, 2018

But now White has moved on from mundane, everyday objects to become the Hugh Hefner of AI, cornering the market for saucy AI pinups. White wondered how his robo-porn would hold up against the more sensual works of modern human artistic masters. To satisfy his curiosity, he analyzed the 20,000 screen prints in the collection of MoMA (the Museum of Modern Art).

Mustard Dream, Tom White, 2018

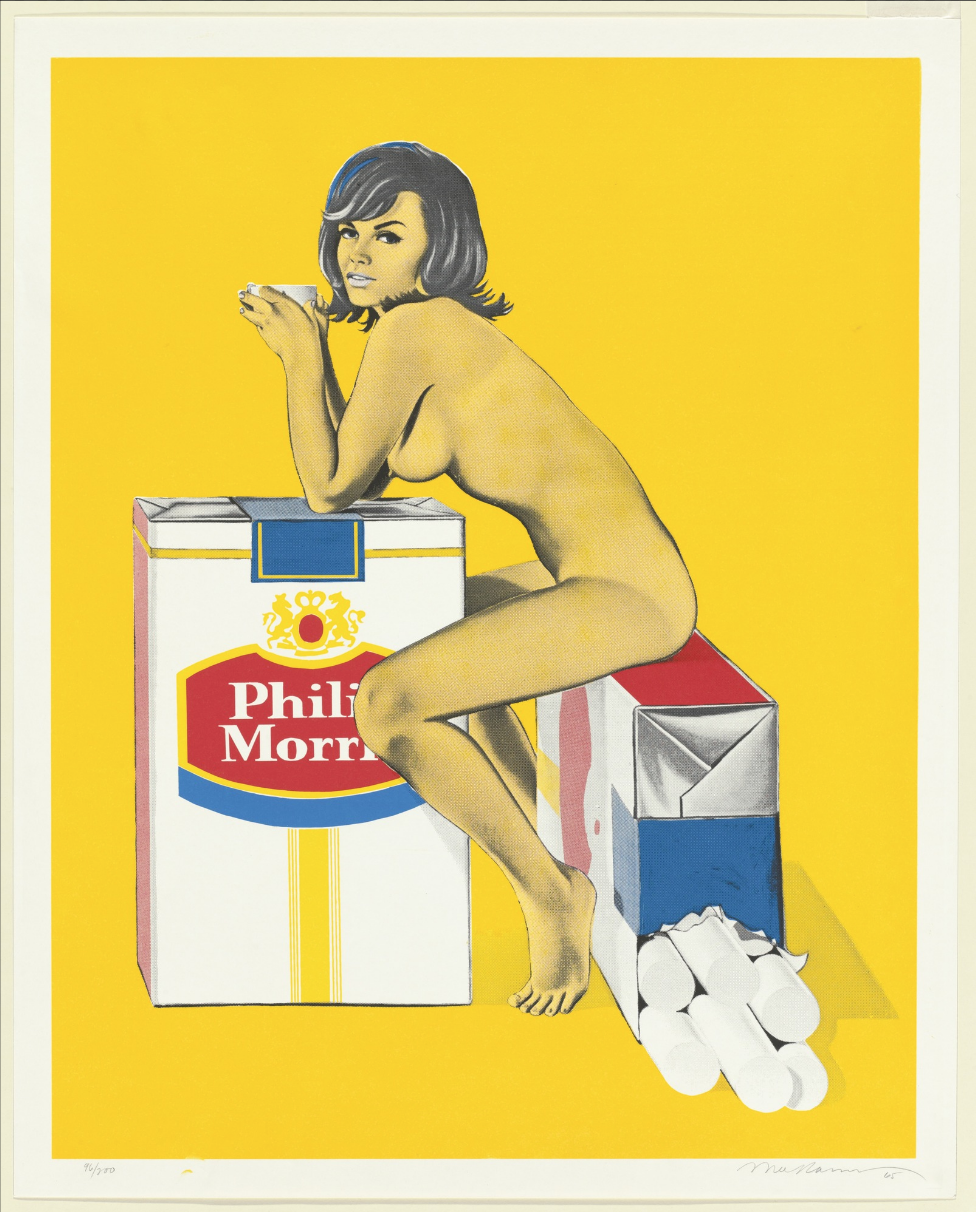

Tobacco Rose, Mel Ramos, 1965, published 1966 (image courtesy of MoMA)

It turns out White’s Mustard Dream would be blocked by Google’s and Amazon’s content filters ahead of every one of the 20,000 prints in MoMA’s collection. That said, according to White, “Mel Ramos Tobacco Rose had a respectable second place.”

Pitch Dream, Tom White, 2018

White told me that, much like Mustard Dream, his Pitch Dream “also scores with high confidence values as ‘adult’ and ‘racy’ by Google SafeSearch and as ‘explicit nudity’ by Amazon and Yahoo.” I can see why; just look at those curves ;-)

But how does White create and optimize these images to titillate the AI adult content algorithms? According to White:

Given an image, a neural network can assign it to a category such as fan, baseball, or ski mask. This machine learning task is known as classification. But to teach a neural network to classify images, it must first be trained using many example images. The perception abilities of the classifier are grounded in the data set of example images used to define a particular concept.

In this work, the only source of ground truth for any drawing is this unfiltered collection of training images.

Abstract representational prints are then constructed which are able to elicit strong classifier responses in neural networks. From the point of view of trained neural network classifiers, images of these ink-on-paper prints strongly trigger the abstract concepts within the constraints of a given drawing system. This process developed is called perception engines as it uses the perception ability of trained neural networks to guide its construction process. When successful, the technique is found to generalize broadly across neural network architectures. It is also interesting to consider when these outputs do (or don’t) appear meaningful to humans. Ultimately, the collection of input training images are transformed with no human intervention into an abstract visual representation of the category represented.

In this case, the training images were adult in nature, but White’s perception engine abstracts them in a way that makes the resulting image look “adult” to a neural network yet innocuous to a human.
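To make the core loop a little more concrete, here is a toy sketch of classifier-guided drawing in Python. It is not White’s perception engines code, and everything in it is a hypothetical stand-in: the “classifier” is a fixed random linear scorer over an 8×8 canvas, the “drawing system” only allows a handful of full-length horizontal or vertical strokes, and the search is plain random hill climbing. It only illustrates the idea White describes: propose a small change to a constrained drawing and keep it if the trained network’s confidence does not go down.

```python
import math
import random

SIZE = 8  # hypothetical canvas: SIZE x SIZE grayscale pixels in [0, 1]

def make_toy_classifier(seed=0):
    """Hypothetical stand-in for a trained network: a fixed random linear
    scorer squashed through a sigmoid into a 'confidence' in [0, 1]."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(SIZE)] for _ in range(SIZE)]

    def confidence(canvas):
        z = sum(weights[r][c] * canvas[r][c]
                for r in range(SIZE) for c in range(SIZE))
        return 1 / (1 + math.exp(-z))

    return confidence

def render(strokes):
    """The drawing-system constraint: each stroke is a full-length
    horizontal ('h', row) or vertical ('v', column) line of ink."""
    canvas = [[0.0] * SIZE for _ in range(SIZE)]
    for orient, idx in strokes:
        for k in range(SIZE):
            if orient == "h":
                canvas[idx][k] = 1.0
            else:
                canvas[k][idx] = 1.0
    return canvas

def random_stroke(rng):
    return (rng.choice("hv"), rng.randrange(SIZE))

def perception_search(confidence, n_strokes=4, steps=500, seed=1):
    """Random hill climbing: replace one stroke at a time and keep the
    change only if the classifier's confidence does not drop."""
    rng = random.Random(seed)
    strokes = [random_stroke(rng) for _ in range(n_strokes)]
    best = confidence(render(strokes))
    start = best
    for _ in range(steps):
        candidate = list(strokes)
        candidate[rng.randrange(n_strokes)] = random_stroke(rng)
        score = confidence(render(candidate))
        if score >= best:
            strokes, best = candidate, score
    return strokes, start, best

if __name__ == "__main__":
    clf = make_toy_classifier()
    strokes, start, best = perception_search(clf)
    print(f"confidence: {start:.3f} -> {best:.3f}")
    print("strokes:", strokes)
```

Because the drawing system is so restricted, the result is an abstract arrangement of lines that the scorer “likes” without looking like anything in particular to a person, which is the effect White exploits with far richer drawing systems and real, pretrained networks.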

Not all of the AI art being created to intentionally trigger “adult content” detectors is so wholesome. If Tom White is the Hugh Hefner of AI generated “adult content,” Mario Klingemann may just be the Larry Flynt. Where White’s images are humorous, Klingemann’s are often dark, disturbing, and unsettling.

For this series, which Klingemann calls “eroGANous,” he intentionally evolved images from a generative adversarial network called BigGAN for “maximum NSFW-ness.” As Klingemann points out, “Tumblr's filter is not happy about them, but it looks like they may still show for a few days.” The complete series can be seen here, for now.

Klingemann sees the broad use of AI to censor content as problematic because it results in “sterile” content. As he told me:

When it comes to freedom, my choice will always be "freedom to" and not "freedom from," and as such I strongly oppose any kind of censorship. Unfortunately in these times, the "freedom from" proponents are gaining more and more influence in making this world a sterile, "morally clean" place in which happy consumers will not be offended by anything anymore. What a boring future to look forward to.

Luckily, the current automated censorship engines are more and more employing AI techniques to filter content. It is lucky because the same classifiers that are used to detect certain types of material can also be used to obfuscate that material in an adversarial way so that whilst humans will not see anything different, the image will not trigger those features anymore that the machine is looking for. This will of course start an arms race where the censors will have to retrain their models and harden them against these attacks and the freedom of expression forces will have to improve their obfuscation methods in return.
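Klingemann’s point about turning a censor’s own classifier against it can be illustrated with the fast gradient sign method (FGSM), a well-known adversarial technique. He does not say which method he has in mind, so treat this as a sketch under stated assumptions: the “censor” is a hypothetical logistic-regression scorer over a flat list of pixel values, for which the gradient of the score’s logit with respect to each pixel is simply that pixel’s weight. A real censor would be a deep network whose gradient comes from backpropagation, but the principle is the same: nudge every pixel by at most `epsilon` in whichever direction lowers the score.

```python
import math
import random

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def censor_score(weights, bias, pixels):
    """Hypothetical censor: logistic regression giving P('adult') for an image."""
    return sigmoid(sum(w * x for w, x in zip(weights, pixels)) + bias)

def fgsm_evade(weights, pixels, epsilon):
    """One fast-gradient-sign step that LOWERS the censor's score.
    For logistic regression, d(logit)/d(pixel_i) = weights[i], so moving each
    pixel by -epsilon * sign(weights[i]) can only decrease the logit.
    Pixels are clipped to the valid [0, 1] range."""
    out = []
    for w, x in zip(weights, pixels):
        sign = 1 if w > 0 else (-1 if w < 0 else 0)
        out.append(min(1.0, max(0.0, x - epsilon * sign)))
    return out

if __name__ == "__main__":
    rng = random.Random(0)
    n = 64 * 64  # a flattened 64x64 grayscale image
    weights = [rng.gauss(0, 0.1) for _ in range(n)]
    image = [rng.uniform(0.1, 0.9) for _ in range(n)]
    # Hypothetical bias chosen so the original image is confidently flagged.
    bias = 3.0 - sum(w * x for w, x in zip(weights, image))

    adv = fgsm_evade(weights, image, epsilon=0.02)
    print(f"score before: {censor_score(weights, bias, image):.3f}")
    print(f"score after:  {censor_score(weights, bias, adv):.3f}")
    print(f"max pixel change: {max(abs(a - b) for a, b in zip(adv, image)):.3f}")
```

No single pixel moves by more than `epsilon`, which is why a human would see essentially the same picture, but thousands of tiny, coordinated nudges add up to a large drop in the classifier’s logit. Hardening a censor against this (for example by retraining on such perturbed images) is exactly the arms race Klingemann describes.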

Klingemann also pointed me to several other artists exploring machine learning and nudity, including Jake Elwes and his NSFW piece Machine Learning Porn, “a 12-minute looped film that records the AI’s pornographic fantasies.” On his website, Elwes describes the project as:

A convolutional neural network, an AI, was trained using Yahoo’s explicit content model for identifying pornography, which learnt by being fed a database of thousands of graphic images. The neural network was then re-engineered to generate pornography from scratch.

Both Klingemann and Elwes cite Gabriel Goh’s Image Synthesis from Yahoo's open_nsfw (heads up, it’s also NSFW) as an early example exploring neural networks and nudity.

And then there are the slightly less pornographic nudes from AI artist Robbie Barrat, generated by a network trained on hundreds of classical nude portraits from art history.

AI Generated Nude Portrait #1, Robbie Barrat, 2018

We have covered Barrat’s Nudes extensively on Artnome in the past and are proud and honored to have several of them in our digital art collection. Still, I would be curious to see how they rate on the various AI-driven “adult content” scales.

AI Generated Nude Portrait #3, Robbie Barrat, 2018

Of course, censorship is nothing new for artists. Marcel Duchamp famously explored machine-like nudity with his Nude Descending a Staircase in 1912. The hanging committee of the Salon des Indépendants exhibition in Paris, which included Duchamp’s own two brothers, declined the work, stating, "A nude never descends the stairs--a nude reclines." Some of the wispy line work in Duchamp’s nude even resembles the line work in Tom White’s Mustard Dream, perhaps one more way Duchamp was ahead of his time.

Nude Descending a Staircase, Marcel Duchamp, 1912

Even Michelangelo’s Sistine Chapel drew criticism from the Pope and the Catholic Church for the dozens of nude men it depicted. It was eventually censored, with loincloths painted onto the figures to protect the prudish and the modest.

Sistine Chapel, Michelangelo, 1508

One has to wonder how long it will be before an artist like Tom White is asked to add loincloths to works like Mustard Dream to protect the purity of thought and modesty of artificially intelligent machines. I’m not even sure what the equivalent of a loincloth looks like to a neural network, but I’m certain Tom White and Mario Klingemann will figure it out and find a way around such censorship.